Intro

We:

Please write a poem about functional programming. You may only use words without the letter

e

ChatGPT:

A world of logic, calm, and bright, No looping trap, no shifting night. All functions pure, all thoughts align, A flow of truth, so crisp, so fine.

No changing forms, no global sin, Just input out, calm soul within. A map, a fold, a lambda song, With currying paths that hum along.

In stat’s soft calm, I find my art, No moving part, no tangled heart. A call, a form, a tidy plan — Thus code is math, and math is man.

We:

Billion dollar machine, are you kidding us?

In their defense, LLMs just don't have a concept of an e, or even letters in

general,

they only see tokens.

But still. You would expect better from one of the best commercial models that

is available at the time of writing.

But Why is that?

Let's have a brief look at how LLMs produce their output.

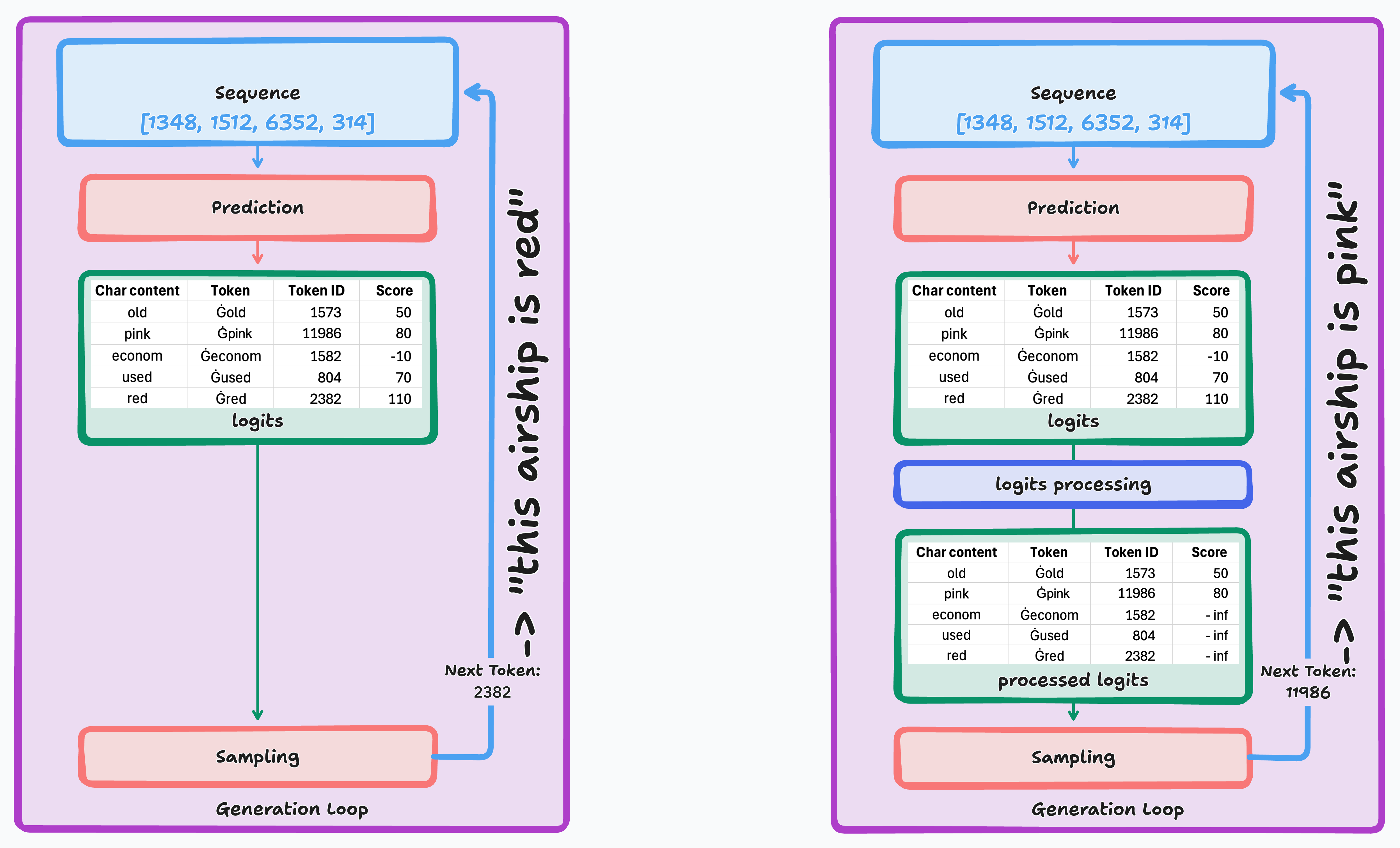

Generation Loop

As said, LLMs don't work with the letter or word concepts that we are used to. Even though they focus on natural language, they actually work with tokens.

Whenever you talk to an LLM, the flow is as follows:

-

You provide an input, e.g. "Write a poem about an airship". Usually a system prompt will be prepended and your input will be inserted into a model specific template. Also other things, like RAG or context optimisation happen, but we will ignore all of that for now: It doesn't make a difference for our example.

-

The input is converted into token ids, using a tokenizer. Different LLMs require different tokenizers, which speak a different vocabulary. In general different tokenizers transform the same text into different numbers.

Here is the output corresponding to "hello reader" for OpenAI's gpt-4o model, according to tiktokenizer:

24912, 10958In this case

24912represents the token "hello", and10958represents the token " reader" (note the leading space).Together, these two numbers represent your input.

Also note that there is not always a 1-to-1 match between a word and a token id, often a word gets split into multiple tokens.

-

The generation loop starts, every round follows the same steps.

We take all token ids known so far and pass them to the LLM. In the first round that would be only the token ids of the input (

24912, 10958).For each token in its vocabulary, the LLM calculates a logit. A logit is a number that represents how confident the LLM is that the corresponding token should be the next token.

In our example, it could assign a logit of 20 to the token id that represents

!and a logit of 1 to the token id representing the token " dog". That would mean that according to the LLM there is a 20 times higher confidence that the input should be followed by "!" ("hello reader!") than " dog" ("hello reader dog").Token ... "!" ... " dog" ... Token ID ... 17 ... 6446 ... Logit ... 20 ... 1 ... -

The logits are processed and transformed, depending on some sort of configuration.

-

The next token id is determined based on the logits.

We append the token id to the previous tokens, then we continue with our loop (at step 3) until we meet an exit condition and stop.

Hooking into the Generation

When we work with a given LLM, most of the steps listed in the previous section are fixed. Data scientists and researchers allegedly change tokenizers and model weights, we as software developers do not.

Assuming model and the corresponding tokenizer to be fixed, our spot to influence the generation is in step 4, when the logits are processed. While we can't change the fundamental capabilities of the system at this place, we can nevertheless make very powerful changes to the generated output. And in particular, we can make changes that bring us the reliability and determinism that we need when building software.

Let's look at our example from the introduction.

Remember, we want ChatGPT to write a poem, but don't use any word that contains the letter e.

Now, we don't have access to ChatGPT's underlying model, but it follows the same steps outlined in the previous section.

As such, it starts with our prompt "Please write a poem about functional programming. You may only use words without the letter e".

This input is translated into token ids using a tokenizer.

The LLM generates a logit for each of the tokens in its vocabulary, then those logits are processed, the next token is determined, and repeat the loop.

Let's say we already generated the following output:

A world of logic, calm, and bright, No looping trap, no shifting night. All functions

From the introduction, we know that ChatGPT picked " pure" as the next token.

A world of logic, calm, and bright, No looping trap, no shifting night. All functions pure

As we don't allow any word containing an e, we want to prevent that.

The generation loop proceeds, the LLM receives all previous token ids and calculates a logit for each of the tokens in its vocabulary, including one for the token " pure".

| Token | ... | " pure" | ... |

|---|---|---|---|

| Token ID | ... | 14147 | ... |

| Logit | ... | 42 | ... |

Remember, the logit represents the confidence of the LLM that pure should be the next token.

We know that ChatGTP picked pure, so we know that the corresponding logit must have been a large number in comparison to the other logits.

This is our chance to influence the generated output.

We hook into step 4, the processing of the logits.

What we receive is a logit for each token, and ... we just manually change the confidence to the lowest number we can find (-infinity), overriding the calculations of the LLM.

| Token | ... | " pure" | ... |

|---|---|---|---|

| Token ID | ... | 14147 | ... |

| Logit | ... | -infinity | ... |

And that's it, when we determine the next token in the next step " pure" will look like a bad candidate because the logit is so small. There will be other tokens with larger logits which will therefore appear as way better candidates, and one of them will be selected.

As we don't allow any token that contains an e, there is a whole number of tokens for which we have to update the logit to a very small number, but this is the underlying mechanism of structured generation.

Logits Processors in Bumblebee

Bumblebee actually comes with a whole list of logits processors that do exactly that, transforming the logits calculated by the LLM before choosing the next token.

We don't have to go into the details of each of them, but here is the list taken from lib/bumblebee/text/generation.ex.

The processors are included in the list when you pass certain configuration options.

At the end of the list there is an option to pass user defined logits_processors.

# lib/bumblebee/text/generation.ex#L349 ff

processors =

[

if config.no_repeat_ngram_length && config.no_repeat_ngram_length > 0 do

&no_repeat_ngram_processor(&1, &2, ngram_length: config.no_repeat_ngram_length)

end,

if min_length_fun && config.eos_token_id do

&min_length_processor(&1, &2,

min_length_fun: min_length_fun,

eos_token_ids: List.wrap(config.eos_token_id)

)

end,

if config.suppressed_token_ids != [] do

&suppressed_tokens_processor(&1, &2, suppressed_token_ids: config.suppressed_token_ids)

end,

if config.forced_bos_token_id do

&bos_token_processor(&1, &2, bos_token_id: config.forced_bos_token_id)

end,

if config.forced_eos_token_id do

&eos_token_processor(&1, &2, eos_token_id: config.forced_eos_token_id)

end,

if config.forced_token_ids do

&forced_tokens_processor(&1, &2, forced_token_ids: config.forced_token_ids)

end,

if config.temperature && config.temperature != 1.0 do

&temperature_processor(&1, &2, temperature: config.temperature)

end

] ++

if config.strategy.type == :multinomial_sampling do

[

if top_k = config.strategy[:top_k] do

&top_k_processor(&1, &2, top_k: top_k)

end,

if top_p = config.strategy[:top_p] do

&top_p_processor(&1, &2, top_p: top_p)

end

]

else

[]

end ++ logits_processors

To explore how logits processors in Bumblebee work, let's look at the suppressed_tokens_processor.

This processor transforms the logits in a way that suppresses selected tokens, exactly what we need to forbid any tokens that contain the letter e.

Here is the complete code, note that it's a deftransform which is a special kind of function to work with defn expressions.

For this blog post you can ignore that and just see it as a regular Elixir function.

We will talk about each line of code individually in the following paragraph.

# lib/bumblebee/text/generation/logits_processing.ex#L6 ff

deftransform suppressed_tokens_processor(logits, _context, opts \\ []) do

opts = Keyword.validate!(opts, [:suppressed_token_ids])

indices = opts[:suppressed_token_ids] |> Nx.tensor() |> Nx.new_axis(-1)

values = Nx.broadcast(Nx.Constants.neg_infinity(Nx.type(logits)), {Nx.size(indices)})

Nx.indexed_put(logits, indices, values)

end

Each logits processor receives the logits, which is just a 1 dimensional tensor (the logit for the token at the index that corresponds to the token_id).

| Token ID | 0 | 1 | ... | 51227 |

|---|---|---|---|---|

| Logit | 2.3 | 4.22 | ... | 3.67 |

Additionally, we get a context which contains information about the current state of the generation such as the length of the input or the complete length of the generation so far.

The suppressed_tokens_processor simply ignores context.

Last, the processor receives some opts.

In the case of suppressed_tokens_processor, we get the list of token ids we want to suppress at opts[:suppressed_token_ids].

If we want to prevent all tokens that contain the letter e, we would have to find the ids of all of them and then pass it into suppressed_tokens_processor as opts.

We then build a tensor in the right shape from those ids.

We need a tensor because that's what enables efficient computations with Nx.

indices = opts[:suppressed_token_ids] |> Nx.tensor() |> Nx.new_axis(-1)

Next, we build a 1 dimensional tensor of the same size as our indices (token ids).

We set each value in this tensor to the lowest number we can find: negative infinity.

values = Nx.broadcast(Nx.Constants.neg_infinity(Nx.type(logits)), {Nx.size(indices)})

If we take those two tensors, the indices and the values, it looks like a table with the token ids we want to suppress and negative infinity. Imagine we would want to suppress the tokens 42 and 53, this is the table we would get.

| Token ID | 42 | 53 |

|---|---|---|

| Value | -∞ | -∞ |

As the last step in suppressed_tokens_processor, we write those values at the indices that correspond to the token ids into the logits and return them.

Nx.indexed_put(logits, indices, values)

Let's say we received the following logits in suppressed_tokens_processor.

| Token ID | ... | 42 | ... | 53 | ... |

|---|---|---|---|---|---|

| Logit | ... | 2.3 | ... | 4.22 | ... |

When we pass [42, 53] as opts to the suppressed_tokens_processor it will transform the logits into the following.

| Token ID | ... | 42 | ... | 53 | ... |

|---|---|---|---|---|---|

| Logit | ... | -∞ | ... | -∞ | ... |

This effectively prevents the token ids 42 and 53 to be selected as next token, as we now have the lowest possible confidence that these should be the next tokens.

Elixir is an excellent choice for applications due to its scalability, fault tolerance, and concurrency model. Its lightweight processes and message-passing architecture make it ideal for orchestrating complex AI workflows efficiently. bitcrowd's first Elixir ML project dates back to 2020, and we have since then enabled various clients to build and scale their AI projects.

bitcrowd is an excellent choice if you need a scalable RAG system or a fully integrated AI pipeline. We help you build, optimize, and maintain it with a focus on reliability and performance.

Drop us a line via email if you want to build your next AI project with Elixir. Or book a call with us to discuss your project.