On 28 June 2025, the new European Accessibility Act (EAA) will come into force, requiring many industries and all public bodies to ensure their websites are accessible to all users.

This means that for many products, passing WCAG 2.1 level AA will be a legal requirement. Wherever you are, passing a web accessibility audit normally means passing the WCAG 2.1 level AA, since for once we have a single standard to measure by — the WCAG is the global standard for interactive products.

Many basic accessibility errors can be found by using automated tools. They can’t tell you whether your UI is usable and accessible (you’ll need a human to tell you that), but they’ll help you avoid breaking your UI for many of your users.

Creating, updating, and maintaining an accessible web app or site is a process, not a single action. It can’t be finished in one go, and checked off your todo list. An accessibility audit helps you get an existing product compliant with the standards and legislation, but to ensure the product doesn’t degrade once the audit is over, you need to put in place a robust process.

Simplify this by using tools and implementing processes to watch your codebase for you, highlighting any accessibility regressions. This post is a list of some tools we use, but doesn’t cover usage of each in detail. Getting the most out of these tools will take knowledge of accessibility issues, and specifically the WCAG guidelines.

At bitcrowd we use this tooling to catch the common low-hanging fruit: invalid HTML and ARIA usage, inaccessible UI patterns, and color contrast issues. These tools help us assess and prioritise accessibility failings, so we can bring a product to a good baseline level of accessibility. With the basics covered, our developers can move on to manually testing and reviewing the UI they’re creating, giving everyone a great user experience.
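To make “color contrast issues” concrete, here is a minimal sketch of the calculation behind such a check, based on the relative luminance and contrast ratio definitions in WCAG 2.1. The function names are our own for illustration, and colors are assumed to be opaque sRGB triples; real tools also have to resolve transparency, background images, and font sizes.

```javascript
// Convert one 8-bit sRGB channel (0-255) to its linear-light value,
// following the WCAG 2.1 definition of relative luminance.
function linearize(channel) {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance of an [r, g, b] color: 0 for black, 1 for white.
function relativeLuminance([r, g, b]) {
  return 0.2126 * linearize(r) + 0.7152 * linearize(g) + 0.0722 * linearize(b);
}

// Contrast ratio between two colors, from 1 (identical) up to 21 (black on white).
function contrastRatio(foreground, background) {
  const l1 = relativeLuminance(foreground);
  const l2 = relativeLuminance(background);
  const lighter = Math.max(l1, l2);
  const darker = Math.min(l1, l2);
  return (lighter + 0.05) / (darker + 0.05);
}

// Level AA asks for at least 4.5:1 for normal text and 3:1 for large text.
function passesAA(foreground, background, isLargeText = false) {
  return contrastRatio(foreground, background) >= (isLargeText ? 3 : 4.5);
}

// Two greys that look almost identical land on different sides of the threshold.
console.log(passesAA([118, 118, 118], [255, 255, 255])); // #767676 on white
console.log(passesAA([119, 119, 119], [255, 255, 255])); // #777777 on white
```

The two greys in the usage lines differ by a single step per channel, which is why this kind of check is better left to a tool than to the eye.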

Our tech stack

Right now we build frontend projects using React, with Storybook to manage and organise our UI components. We build backends and the associated admin interface with Elixir and LiveView. Our accessibility testing tools fit into this ecosystem, but there will almost certainly be alternatives for whichever tech stack you use.

Static code checks/Linting

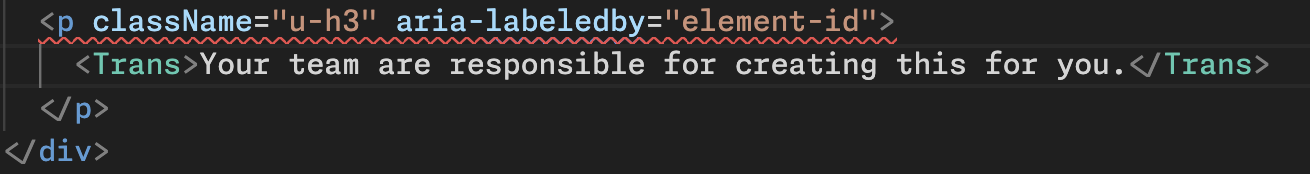

Just like we check for common programming pitfalls in our JavaScript code, linting for accessibility issues in UI components is super useful for flagging problems before they ever get merged to main. While building the UI your editor can be live-linting your code, indicating problems like bad UI patterns, and missing or invalid HTML elements and attributes, as you write them.

The linter can be run in the terminal, and is always run on CI for every pull request, alongside tests and dry-run builds. This means a developer can instantly check for issues from the command line, and the CI will stop us before we check in code that has accessibility issues.

eslint-plugin-jsx-a11y

We use ESLint to lint all our JavaScript and TypeScript, and unsurprisingly for a tool with a huge community, there’s a well-established plugin to lint the HTML in the render function of your JSX component code.
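As a rough sketch of the setup, the configuration below enables the plugin’s recommended rule set. It assumes the package is installed as a dev dependency and that the project uses an ESLint 8-style .eslintrc.js file; projects on ESLint’s newer flat config format will need the equivalent setup described in the plugin’s README. The single rule override is only there to show where adjustments go.

```javascript
// .eslintrc.js (legacy ESLint config format, assumed for this sketch)
module.exports = {
  parserOptions: {
    ecmaVersion: 'latest',
    sourceType: 'module',
    // Let ESLint parse JSX in component files.
    ecmaFeatures: { jsx: true },
  },
  plugins: ['jsx-a11y'],
  // Turn on the plugin's recommended set of accessibility rules.
  extends: ['plugin:jsx-a11y/recommended'],
  rules: {
    // Example override: make sure links without a valid href fail the lint run.
    'jsx-a11y/anchor-is-valid': 'error',
  },
};
```

With this in place, a component that renders an img element without an alt attribute, for example, is reported by the jsx-a11y/alt-text rule.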

Running ESLint (normally npm run lint) from the terminal will now output errors and warnings for any UI that fails one of the plugin’s well-known accessibility checks.

Make sure npm run lint also runs on CI, so the same errors fail the build there. This forces developers to fix the problem before it’s ever deployed.

If you prefer, your IDE probably has an extension or plugin that highlights issues as you type. For example, Microsoft publishes an ESLint extension for VS Code that flags problems right in your editor.

A11yAudit

This one is specific to Elixir applications. It runs the set of accessibility checks from axe-core against your rendered pages, and outputs the results to the terminal. This means developers can run the checks locally before opening a PR, and they run automatically on CI, stopping accessibility issues ever reaching main.

Axe-core is the industry-leading accessibility testing engine that’s the backbone of most HTML a11y-testing packages — many of these tools rely on it.

Unlike a linter, A11yAudit is used as part of feature or end-to-end browser tests. Just like we test app functionality in different scenarios, we add specific scenarios in our test suite to make sure the rendered pages don’t have any violations on them.
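A11yAudit’s own API is Elixir and isn’t reproduced here. To illustrate the idea in JavaScript, the language of our frontend tooling, here is a minimal sketch of what such an assertion does with an axe-core result. The helper names summarizeViolations and assertNoViolations are hypothetical, and sampleResults is a hand-written object in the shape axe-core reports (a violations array whose entries carry id, impact, help, and the affected nodes), not real output.

```javascript
// Hypothetical helper: turn each axe-core violation into one readable line.
function summarizeViolations(results) {
  return results.violations.map((violation) => {
    // Each node lists the CSS selector(s) of an element that failed the rule.
    const targets = violation.nodes
      .map((node) => node.target.join(' '))
      .join(', ');
    return `[${violation.impact}] ${violation.id}: ${violation.help} (${targets})`;
  });
}

// Hypothetical assertion: fail the test when the page has any violations.
function assertNoViolations(results) {
  const lines = summarizeViolations(results);
  if (lines.length > 0) {
    throw new Error(
      `Found ${lines.length} accessibility violation(s):\n${lines.join('\n')}`
    );
  }
}

// Hand-written sample in the shape of an axe-core result, for illustration only.
const sampleResults = {
  violations: [
    {
      id: 'image-alt',
      impact: 'critical',
      help: 'Images must have alternate text',
      nodes: [{ target: ['img.logo'] }],
    },
  ],
};

try {
  assertNoViolations(sampleResults);
} catch (error) {
  console.log(error.message);
}
```

In a real test the results object would come from running axe-core against the page rendered in the browser session, and the thrown error is what makes the CI run fail.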

Manual testing

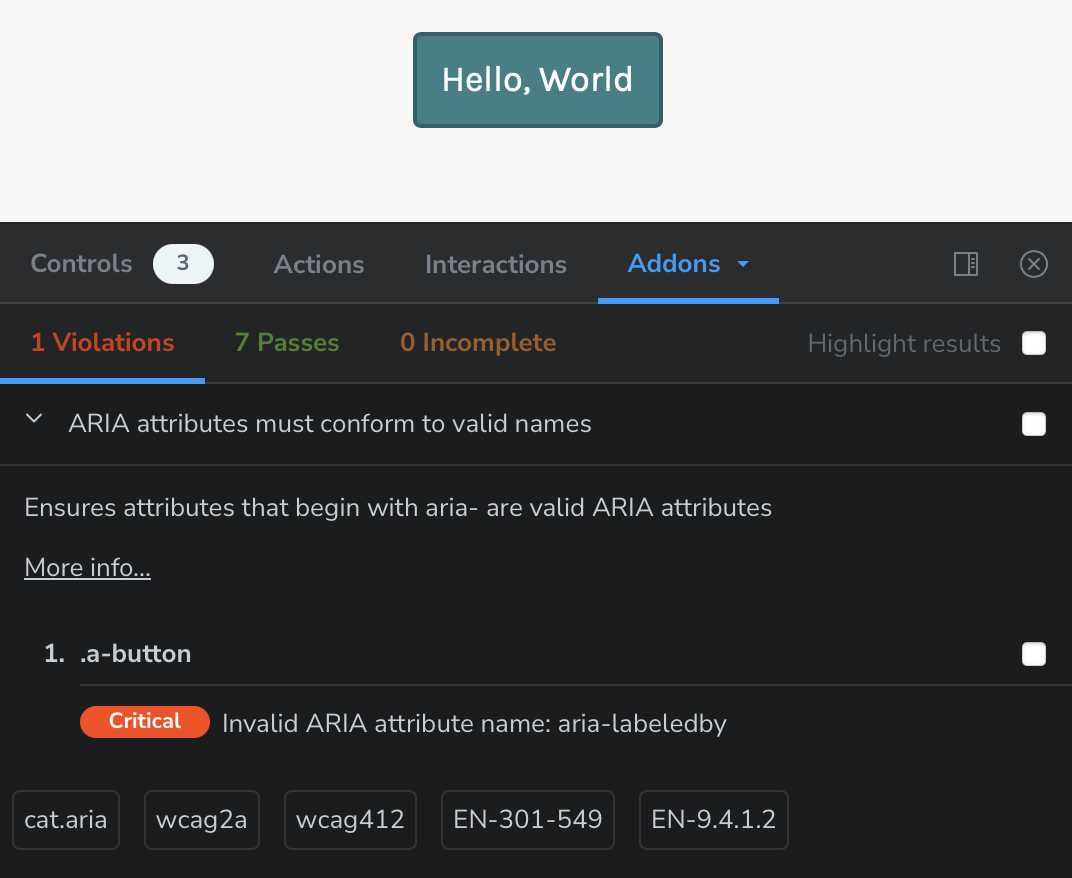

@storybook/addon-a11y

We use Storybook as an isolated environment for developing UI components independently of the application. It allows us to parallelise work in the early stages of a project, when we haven’t yet built the screens and functionality that will be populated with these UI components. Addon-a11y is one of the core add-ons provided by the Storybook team, and like A11yAudit mentioned above, it’s powered by axe-core.

The plugin adds an “Accessibility” panel to the Storybook UI, flagging up any accessibility violations found in the current component, and showing you what you got right.

When reviewing the functionality and visuals of components and screens in Storybook, get in the habit of reviewing the output of this panel — it’s a great overview, and super convenient.
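Enabling the addon is a small change in Storybook’s main configuration file. The sketch below assumes a .storybook/main.js in CommonJS format and that @storybook/addon-a11y is installed as a dev dependency; the stories glob and the framework entry are placeholder assumptions and should match whatever your project already uses.

```javascript
// .storybook/main.js
module.exports = {
  // Assumed location of story files; keep your project's existing glob.
  stories: ['../src/**/*.stories.@(js|jsx|ts|tsx)'],
  // Registering the addon is what adds the "Accessibility" panel.
  addons: ['@storybook/addon-a11y'],
  // Assumed framework package; keep whichever one your project is built on.
  framework: { name: '@storybook/react-vite', options: {} },
};
```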

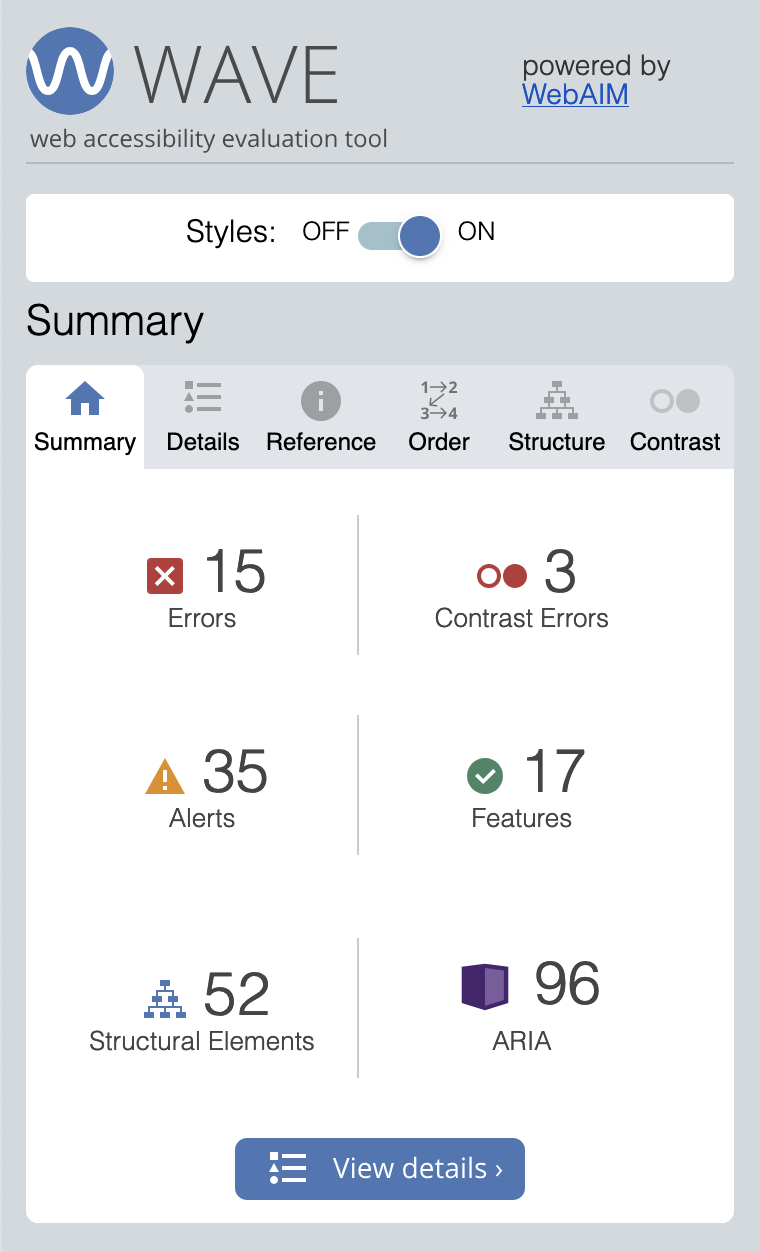

WAVE browser extension

The WAVE browser extensions from WebAIM are available for Chrome, Firefox, and Edge, and are great for reviewing the accessibility of screens running in the application or site, outside the Storybook environment.

While the other tools mentioned here are aimed at developers building UI components, this browser extension is perhaps best used to assess entire web pages, or entire screens of a web application. It’s usable by non-developers too, but will require knowledge of accessibility and the WCAG.

Here at bitcrowd, we use this while reviewing PRs, and to regularly audit applications. After the static code checks of the previous tools are run on isolated components, it’s great to assess your application running as a whole. Composing components together into a larger component or screen, and having a human click and tab around the UI, can raise issues not flagged by static code checks. This is perhaps the end-to-end testing of a11y tests.

Conclusion

By automating the simpler parts of accessibility auditing, we’ve freed up our developers to build better user interfaces. For the issues that need to be manually checked by a human, we use tooling to assist them, making the task more straightforward and productive.

Using these tools, we build UIs that work better for more people, and in more unexpected and non-ideal situations.

Perhaps you find other tools useful for checking for a11y fails? Let us know on one of our socials! Like all of tech, this is a constantly-evolving ecosystem, hopefully one that’s always getting better, enabling us to build better products that are useful to more people, while also avoiding getting sued.