Abstract

This is the second part of a series of blog posts on using a RAG (Retrieval Augmented Generation) information system for your codebase. Together we explore how this can empower your development team. Check out the first post for an introduction into the topic if you haven't already.

In this episode we explore how we can adapt our RAG system for Ruby codebases from the first episode to read and understand Elixir code. We will take a look at LangChain and text "splitting" and "chunking".

Let's dive right into it.

Background

Our RAG system was built with the idea to discover Ruby codebases. In order to have conversations about Elixir codebases as well, we need to make sure our LLM "understands" Elixir code. This is where LangChain comes into play.

LangChain is a toolkit around all things LLMs, including RAG. We use it to parse our documents or codebase and generate a vector database from it. In our simple RAG system, we specify which file endings (.rb) and which programming language (Ruby) our documents have.

The ingestion of programming source code into an LLM with LangChain was initially only supported for Python, C and a few others languages. Then this issue proposed the usage of a parser library like Tree-sitter to facilitate adding support for many more languages. The discussion is worth reading.

Finally, this pull request introduced support for a lot more languages, including Ruby, based on this proposal. It was a school project:

I am submitting this for a school project as part of a team of 5. Other team members are @LeilaChr, @maazh10, @Megabear137, @jelalalamy. This PR also has contributions from community members @Harrolee and @Mario928.

Our plan is to use this as a starting point to enable basic parsing of Elixir source code with LangChain. With that, we should be able to have conversations with our RAG system about Elixir codebases as well.

Splitting / Chunking text

To read our Elixir codebase, the parser needs some rules on where to split the provided source code files at. Generally for RAG, when ingesting (reading in) a text file, PDF, etc. it will try to split it into chunks, ideally along it's semantic meaning. In text documents, the meanings are often grouped along:

- chapters

- paragraphs

- sentences

- words

If your embedding model has enough context capacity, you would try to split along chapters or paragraphs, because human readable text often groups meanings that way. If those are too big, you would try to break between sentences, and, as a last resort, words. One would generally try to avoid splitting inside words. Take for instance "sense" and "nonsense", which carry quite a different meaning.

Splitting / Chunking code

Embedding code is a bit underdeveloped, but the strategy is to break the code into pieces by inserting new lines so that it looks a bit more like natural text, and then let the embedding model deal with the task of making sense (inferring meaning) from it. Interestingly, the models trained on that task do that surprisingly well.

As said, LangChain has dedicated document loaders for source code and a guide on how to add new ones based on Tree-sitter. So we went ahead and implemented a document loader and parser for Elixir source code in LangChain. It only covers the core basics of the language, but it was already enough for our proof-of-concept RAG application. With LangChain now supporting Elixir out of the box, people can use the parser in a variety of different scenarios and will come up with ways to improve it to fit more use cases. Our implementation is only the ground work. You can have a look at the PR if you're interested in what's necessary to add parsing support for another programming language in LangChain. Spoiler: not much if you can utilize Tree-sitter.

The core of LangChain's programming language parsers based on Tree-sitter is their CHUNK_QUERY. For our Elixir parser it looks like this:

CHUNK_QUERY = """

[

(call target: ((identifier) @_identifier

(#any-of? @_identifier "defmodule" "defprotocol" "defimpl"))) @module

(call target: ((identifier) @_identifier

(#any-of? @_identifier "def" "defmacro" "defmacrop" "defp"))) @function

(unary_operator operator: "@" operand: (call target: ((identifier) @_identifier

(#any-of? @_identifier "moduledoc" "typedoc""doc")))) @comment

]

""".strip()

We are using Tree-sitter's own tree query language here. Without diving into the details, our query makes sure to distinguish top level modules, functions and comments. The document loader will then take care of loading each chunk into a separate document and split the lines accordingly. The approach is the same for all programming languages.

Test drive

Let's take this for a spin in our RAG system scripts from episode one of this series.

Just as a refresher: the idea is to have a RAG system for your team's codebase using LLMs locally without exchanging any data with third parties like OpenAI and the like. It includes a conversational AI built with Chainlit, so that members of the team can "chat" with the LLM about the codebase, for instance to get information about the domain or where to find things for the ticket they are working on.

For testing purposes we will use our RAG system on a popular open source Elixir package, the Phoenix Framework.

Get the RAG ready

First we need to get our local RAG system ready for operating on an Elixir codebase. It needs to know:

- Where is the code?

- Which programming language is it?

- Which suffixes have the source code files?

We provide this information via environment variables in a .env file:

OLLAMA_MODEL="llama3:8b"

CODEBASE_PATH="./phoenix"

CODEBASE_LANGUAGE="elixir"

CODE_SUFFIXES=".ex, .exs"

We just cloned the current state of the Phoenix Git repository right next to our RAG code. We also keep using Meta's Llama3 model, and instruct the document loader to look at Elixir files.

At the time of testing our PR on LangChain was not released yet. So we were pointing to our fork's local code for the langchain, langchain-community and langchain-text-splitter Python packages from the requirements.txt file.

For the sake of simplicity we assume the project documentation to be in Markdown and hard-coded this information into our code ingestion logic.

With that, we can set up our vector database for the Phoenix codebase:

python ingest-code.py

Then we start the chat bot:

chainlit run main.py

Chatbot: Hi, Welcome to Granny RAG. Ask me anything about your code!

Now we are ready to have a conversation about the codebase.

Ask questions

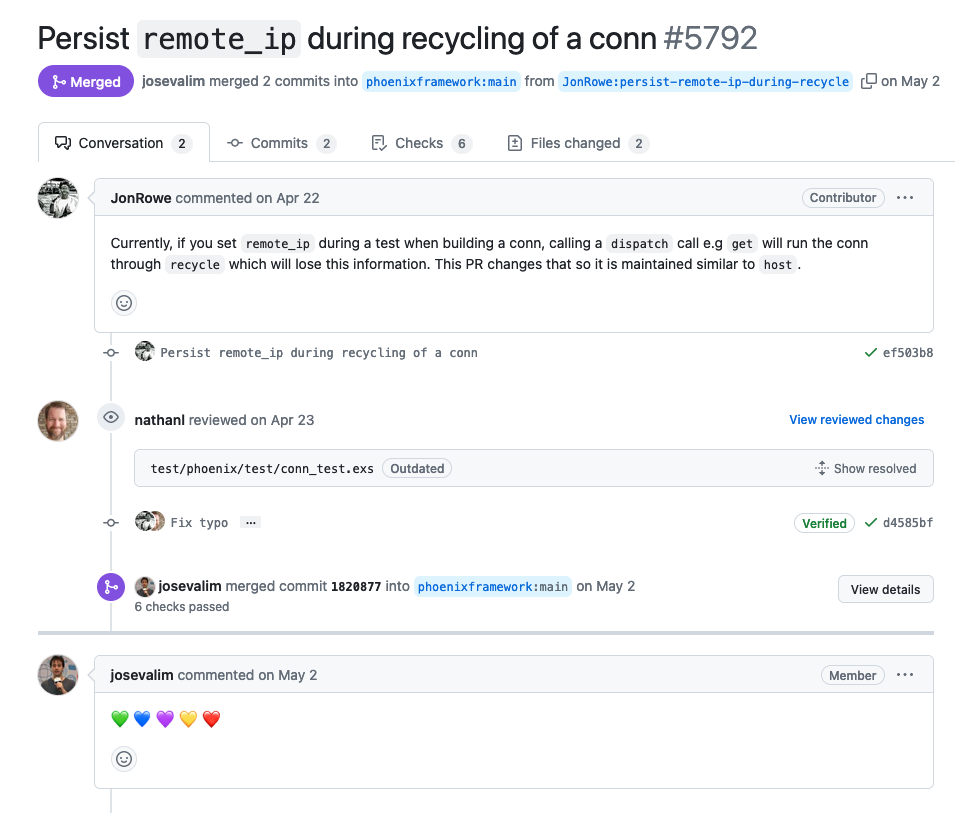

Let’s take an original PR from Phoenix to evaluate the quality of our solution, similar to what we did with Ruby in the previous post.

The pull request fixes a small issue in the recycle/1 function of the Phoenix.ConnTest module at phoenix/lib/phoenix/test/conn_test.ex:

Currently, if you set

remote_ipduring a test when building a conn, calling a dispatch call e.g get will run the conn throughrecyclewhich will lose this information. This PR changes that so it is maintained similar tohost.

We reset our clone of the Phoenix repository (and our vector database) to the state right before the PR was merged and then ask the RAG system for help with the issue:

Understand the problem

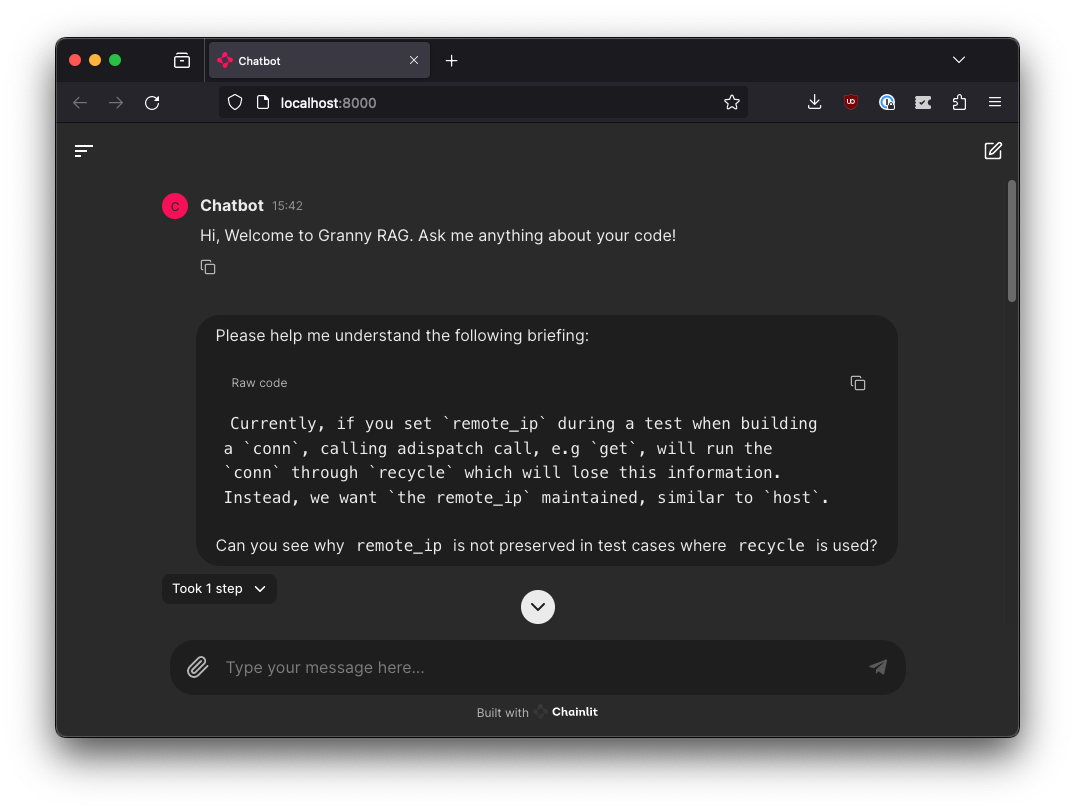

We: Please help me understand the following briefing:

Currently, if you set `remote_ip` during a test when building a `conn`,

calling > a dispatch call, e.g `get`, will run the `conn` through `recycle`

which will > lose this information. Instead, we want `the remote_ip`

maintained, similar to `host`.Can you see why

remote_ipis not preserved in test cases whererecycleis used?

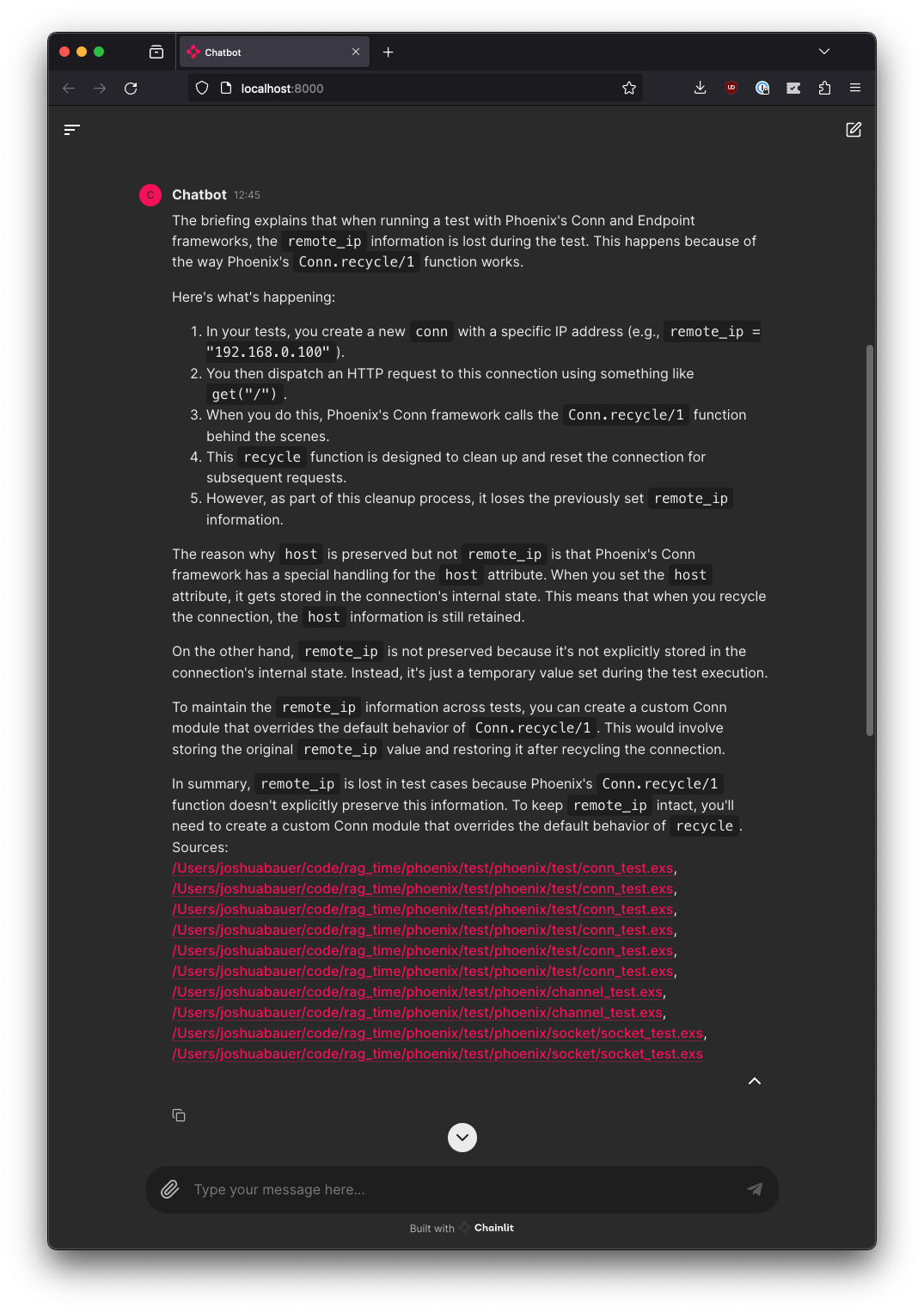

Chatbot: The briefing explains that when running a test with Phoenix's Conn and Endpoint frameworks, the

remote_ipinformation is lost during the test. This happens because of the way Phoenix'sConn.recycle/1function works. Here's what's happening:

- In your tests, you create a new

connwith a specific IP address (e.g.,remote_ip = "192.168.0.100").- You then dispatch an HTTP request to this connection using something like

get("/").- When you do this, Phoenix's Conn framework calls the

Conn.recycle/1function behind the scenes.- This

recyclefunction is designed to clean up and reset the connection for subsequent requests.- However, as part of this cleanup process, it loses the previously set

remote_ipinformation.The reason why

hostis preserved but notremote_ipis that Phoenix's Conn framework has a special handling for thehostattribute. When you set thehostattribute, it gets stored in the connection's internal state. This means that when you recycle the connection, thehostinformation is still retained.On the other hand,

remote_ipis not preserved because it's not explicitly stored in the connection's internal state. Instead, it's just a temporary value set during the test execution. To maintain theremote_ipinformation across tests, you can create a custom Conn module that overrides the default behavior ofConn.recycle/1. This would involve storing the originalremote_ipvalue and restoring it after recycling the connection.In summary,

remote_ipis lost in test cases because Phoenix'sConn.recycle/1function doesn't explicitly preserve this information. To keepremote_ipintact, you'll need to create a custom Conn module that overrides the default behavior ofrecycle.Sources: /Users/joshuabauer/code/rag_time/phoenix/test/phoenix/test/conn_test.exs, […]

Implement a solution

This would give us a hint on how to solve the issue in our app if we were "using" the Phoenix framework. But let's say we want to fix the issue "for good" in the framework itself. We now know the Conn.recycle/1 function is what we're interested in. So we look at the listed sources, search for the recycle/1 function in the code and find ConnTest/recycle/1 as the test-conn equivalent to Conn.recycle/1. This is pretty much already be the "custom Conn module" the RAG was referring to and it's just used for tests. And after all, we only want to change the behavior for tests. So let's ask the RAG what to do with it:

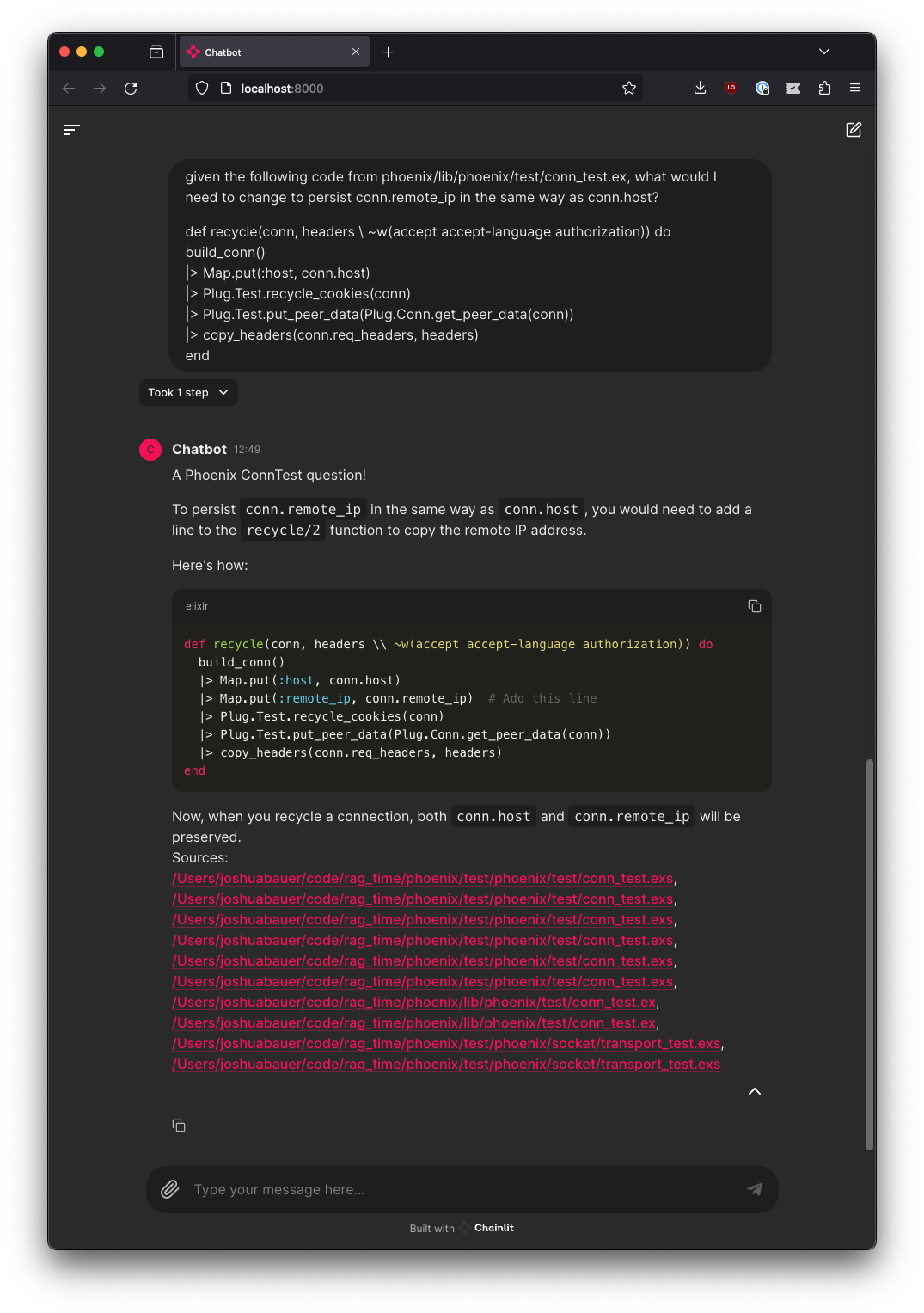

We: Given the following code from

phoenix/lib/phoenix/test/conn_test.ex, what would I need to change to persistconn.remote_ipin the same way asconn.host?def recycle(conn, headers \\ ~w(accept accept-language authorization)) do

build_conn()

|> Map.put(:host, conn.host)

|> Plug.Test.recycle_cookies(conn)

|> Plug.Test.put_peer_data(Plug.Conn.get_peer_data(conn))

|> copy_headers(conn.req_headers, headers)

end

Chatbot: A Phoenix ConnTest question!

To persist

conn.remote_ipin the same way asconn.host, you would need to add a line to therecycle/2function to copy the remote IP address.Here's how:

def recycle(conn, headers \\ ~w(accept accept-language authorization)) do

build_conn()

|> Map.put(:host, conn.host)

|> Map.put(:remote_ip, conn.remote_ip) # Add this line

|> Plug.Test.recycle_cookies(conn)

|> Plug.Test.put_peer_data(Plug.Conn.get_peer_data(conn))

|> copy_headers(conn.req_headers, headers)

endNow, when you recycle a connection, both

conn.hostandconn.remote_ipwill be preserved.Sources: […]

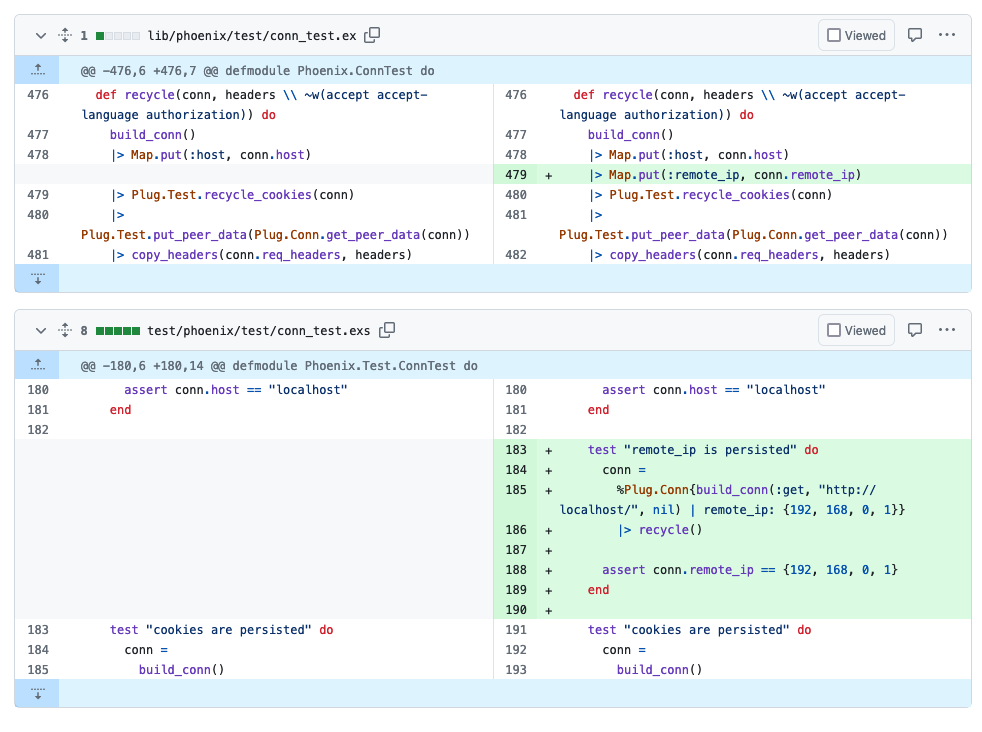

Compare solution

Looking at the PR's file changes, this is exactly what the person came up with:

So the conversation with our Elixir RAG was quite helpful for guiding us through the code and finding an adequate solution to the problem.

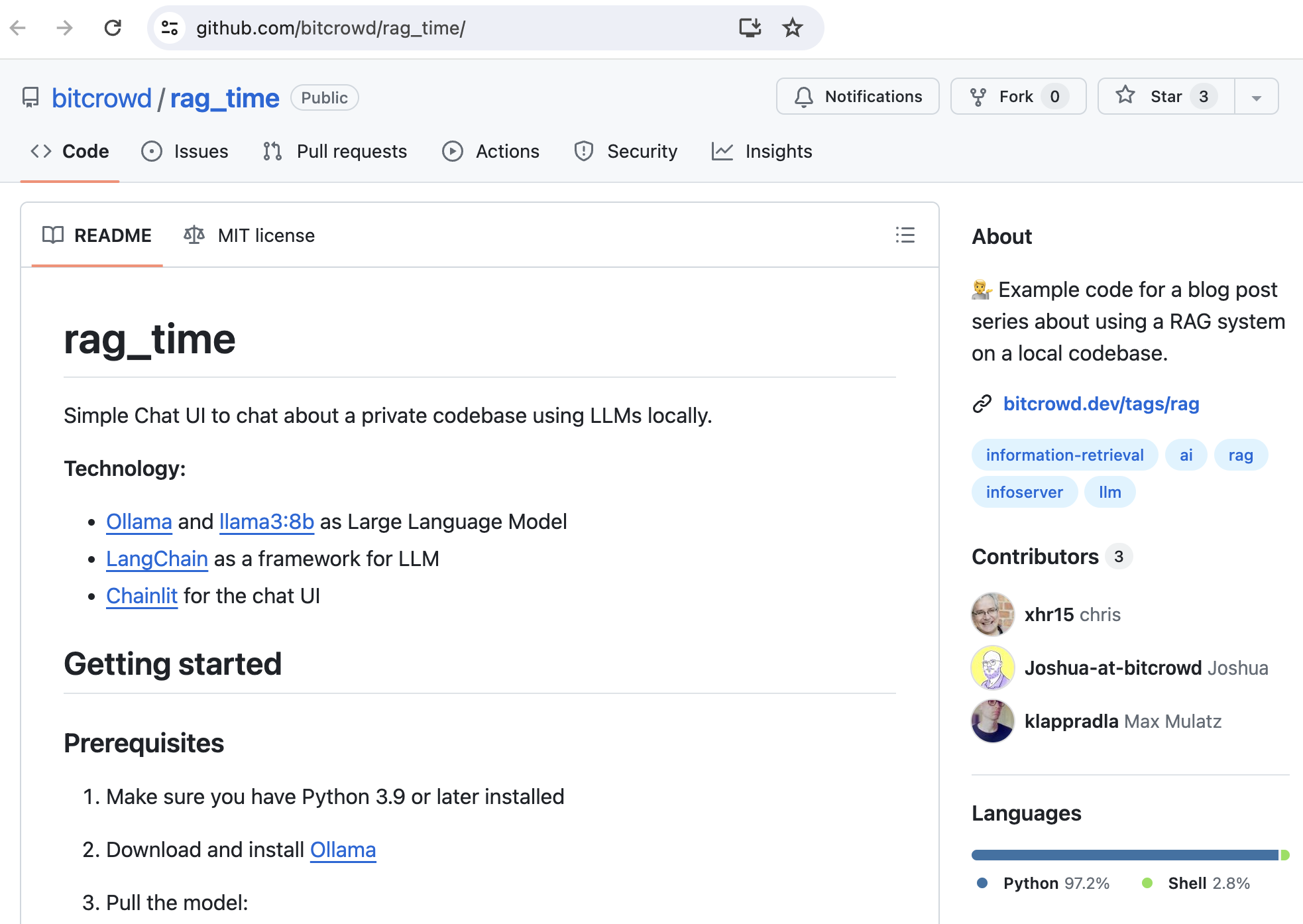

Try it yourself!

It is really easy! Just clone our repo, follow the README and tell the script where to find your codebase:

CODEBASE_PATH="./path-to-my-elixir-codebase"

CODEBASE_LANGUAGE="elixir"

CODE_SUFFIXES=".ex, .exs"

We kept the scripts basic, so that they are easy to understand and extend. Depending on your codebase, the results might not always be perfect, but often surprisingly good.

Outlook

In this post we saw how we can extend a simple off-the-shelf system to better fit the needs of our dev team. We enabled our RAG system to read and understand Elixir code! Text splitting and chunking is just one possible example of where to start when it comes to adjusting a RAG system for your specific needs. What we got is already quite useful, but it's definitely still lacking precision.

We will explore possibilities for further improvements and fine tuning in the next episodes of this blog post series.

Or, if you canʼt wait, give the team at bitcrowd a shout via granny-rag@bitcrowd.net or book a consulting call here.